Reflective Attention on Enforcement of Ethical AI

Explore the interactive blueprint for an agentic AI with persistent memory, and the open-core scaffold to build your own.

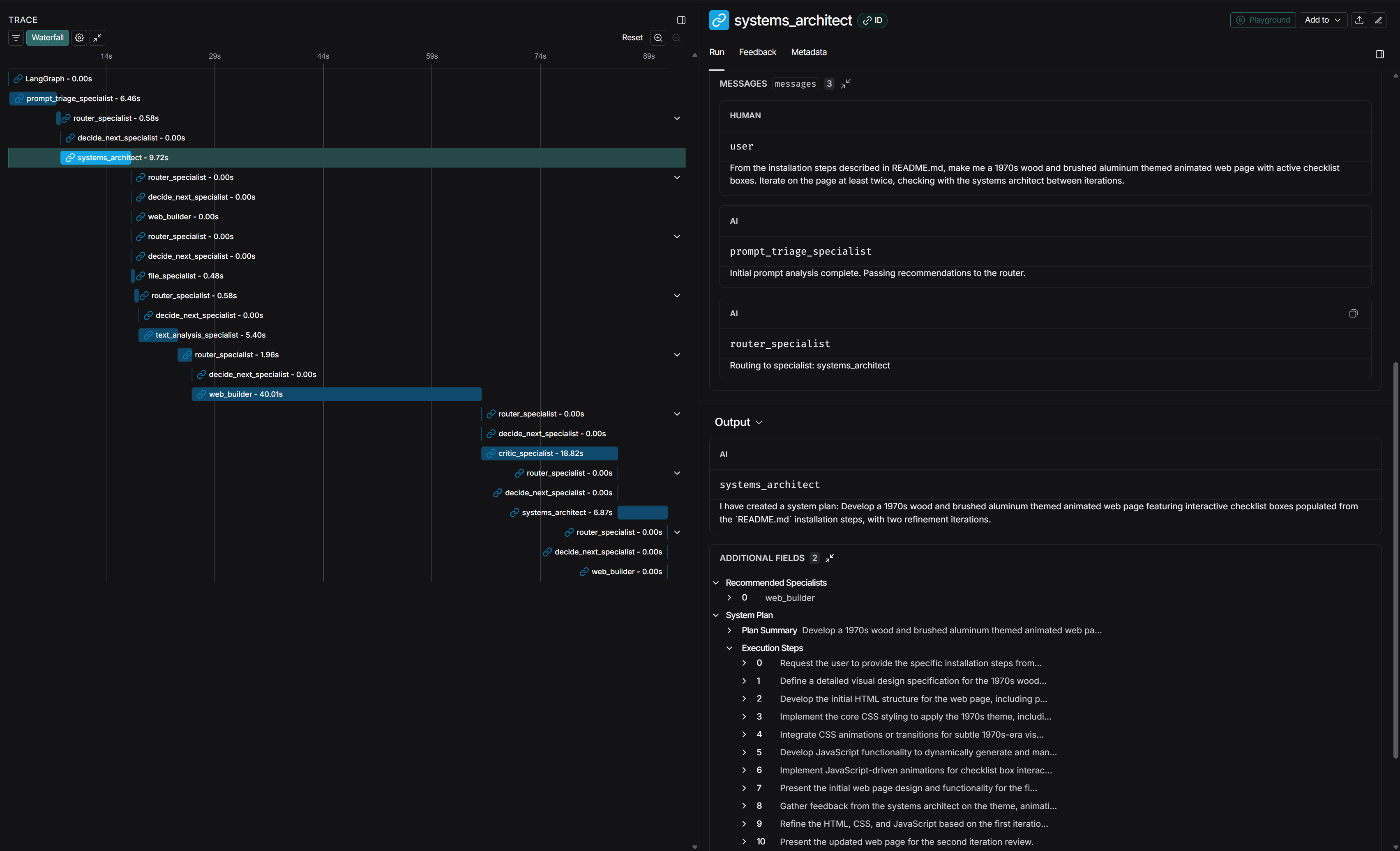

The Architect's Blueprint

A complete, phased construction plan for our cognitive architecture, presented as an interactive "tech tree." See the vision, from the first line of code to a fully realized, persistent agentic mind.

Project Bedrock

The atomic, testable engineering plan that translates the Blueprint's vision into concrete, verifiable workstreams for building a resilient agentic architecture.

5-Minute Developer Briefing

A complete technical rundown of the scaffold's production-ready architecture and mission, plus how to get started building robust, modular, and scalable multi-agent systems.

90-Second Pitch

A concise, audio-only overview of the project's value proposition and core philosophy.

Roadmap Vision

The vision for governed resilience, as detailed in the Project Bedrock roadmap.

The Diplomatic Process

A multi-agent synthesis framework using dialectical negotiation to prevent model drift and the Whispering Gallery Effect.

View on GitHub

Get the source code, read the documentation, and start building with the scaffold today.

The Definitive Guide

The canonical guide to the Whispering Gallery Effect (WGE), a critical failure mode in LLMs that leads to conversational decay.

Fix for Everyday Users

A simple, practical 3-step guide for any AI user to get conversations back on track when "AI Brain Fog" sets in.

Risk for Professionals

An analysis of the systemic risks WGE poses in high-stakes professional domains like legal tech, finance, and compliance.

Contact & Feedback

For inquiries, feedback, or to report issues, please reach out via email.

reflectiveattention@gmail.com